Does Google’s TPU Investment Make Sense Going Forward?

- by 7wData

Google created quite a stir when it released architectural details and performance metrics for its homegrown Tensor Processing Unit (TPU) accelerator for machine learning algorithms last week. But as we (and many of you reading) pointed out, pitting the TPU against earlier “Kepler” generation GPUs from Nvidia was not exactly a fair comparison. Nvidia has done much in the “Maxwell” and “Pascal” GPU generations specifically to boost machine learning performance.

To set the record straight, Nvidia ran some benchmarks of its own to put the performance of its latest Pascal accelerators, particularly the ones it aims at machine learning, into perspective. The results show that the GPU can hold its own against Google’s TPU when it comes to machine learning inference, with the added benefit of also being useful for training the neural networks that underlie machine learning frameworks. The additional benchmarks also illustrate how hard, and perhaps risky, it is to decide to build a custom chip for machine learning when GPUs are on an annual cadence to boost the oomph. Google made a decision at a point in time to do a better job on machine learning inference, and not coincidentally, so did Nvidia.

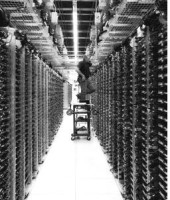

As we explained in our coverage of Google’s TPU architecture and its performance results, there is no question that the TPU is a much better accelerator for Google’s TensorFlow machine learning framework when used to run inferencing – which means taking the trained neural network and pushing new data through it in a running application – compared to a single 18-core “Haswell” Xeon E5-2699 v3 processor running at 2.3 GHz or a single Kepler GK210B GPU with 2,496 cores running at their baseline 560 MHz. The Haswell can support 8-bit integer operations, which is what the TPU uses for inferencing, as well as 64-bit double precision and 32-bit single precision floating point (which can also be used for neural nets but which deliver diminished performance on machine learning training and inferencing). Even so, the Haswell Xeon did not deliver very high throughput in integer mode and could not match the batch size or throughput of the TPU in floating point mode. And a single Kepler GK210B GPU chip, which had no 8-bit integer support at all, only 32-bit and 64-bit floating point modes, was similarly limited in throughput, as this summary table showed:

The Haswell E5 could do 2.6 tera operations per second (TOPS) using 8-bit integer operations running Google’s inferencing workload on its TensorFlow framework, while a single K80 die was capable of supporting 2.8 TOPS. The TPU did 92 TOPS on the inferencing work, blowing the CPU and GPU out of the water by a factor of 35.4X and 32.9X, respectively.
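The speedup factors quoted above are simple ratios of the throughput figures. As a quick check, here is a minimal Python sketch that recomputes them; the constant and function names are our own, chosen for illustration, and the inputs are only the TOPS numbers cited in the text.

```python
# Throughput figures quoted in the article, in tera operations per second (TOPS).
TPU_TOPS = 92.0
HASWELL_TOPS = 2.6   # single 18-core Haswell Xeon E5-2699 v3
K80_DIE_TOPS = 2.8   # single Kepler GK210B die (half of a K80 card)


def speedup(accelerator_tops: float, baseline_tops: float) -> float:
    """Return how many times more throughput the accelerator has than the baseline."""
    return accelerator_tops / baseline_tops


tpu_vs_cpu = speedup(TPU_TOPS, HASWELL_TOPS)
tpu_vs_gpu = speedup(TPU_TOPS, K80_DIE_TOPS)

print(f"TPU vs Haswell: {tpu_vs_cpu:.1f}X")  # prints "TPU vs Haswell: 35.4X"
print(f"TPU vs K80 die: {tpu_vs_gpu:.1f}X")  # prints "TPU vs K80 die: 32.9X"
```

These ratios compare quoted throughput only; they say nothing about latency, batch size, or power draw.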

Benchmark tests are always welcome, of course. And as Google knows full well, more data is always a wonderful thing. But the Keplers are two generations behind the current Pascals, so this was not precisely a fair comparison for the GPUs, even if it does show the comparisons that Google must have been making when it decided to move ahead with the TPU project several years ago.

“We want to provide some perspective on Kepler,” Ian Buck, vice president of accelerated computing at Nvidia, tells The Next Platform. “Kepler was designed back in 2009 to solve traditional HPC problems, and was the architecture used in the ‘Titan’ supercomputer at Oak Ridge National Laboratory. The K80 was one of many Kepler products that we built. The original research in deep learning on GPUs hadn’t even been attempted back when Kepler was designed. As soon as people started using GPUs for artificial intelligence, we started to optimize for both HPC and AI, and you can see that in the results we have had. For instance, the Tesla P40 using our Pascal GPU came out in 2016 just after the TPU and was able to achieve 20X the AI deep learning performance than what we could do with K80.”

