Neuromorphic computing finds new life in machine learning

- by 7wData

Efforts have been underway for forty years to build computers that might emulate some of the structure of the brain in the way they solve problems. To date, they have shown few practical successes. But hope for so-called neuromorphic computing springs eternal, and lately, the endeavor has gained some surprising champions.

This year, the research lab of Terry Sejnowski at the Salk Institute in La Jolla proposed a new way to train "spiking" neurons using a standard form of machine learning, the recurrent neural network, or "RNN."

And Hava Siegelmann, who has been doing pioneering work on alternative computer designs for decades, proposed, along with colleagues, a system of spiking neurons that would perform what's called "unsupervised" learning.

Neuromorphic computing is an umbrella term for a variety of efforts to build computers that resemble some aspect of the way the brain is organized. The term goes back to work in the early 1980s by legendary computing pioneer Carver Mead, who was interested in how the increasingly dense collections of transistors on a chip could best communicate. Mead's insight was that the wires between transistors would have to achieve some of the efficiency of the brain's neural wiring.

There have been many projects since then, including work by Winfried Wilcke at IBM's Almaden Research Center in San Jose, IBM's TrueNorth chip effort, and Intel's Loihi project, among others. ZDNet's Scott Fulton III had a great roundup earlier this year of some of the most interesting developments in neuromorphic computing.

So far, such projects have yielded little practical success, which has fueled tremendous skepticism. At the International Solid-State Circuits Conference in San Francisco, Facebook's head of A.I. research, Yann LeCun, gave a talk on trends in chips for deep learning. He was somewhat dismissive of work on spiking neural nets, prompting a backlash later in the conference from Intel executive Mike Davies, who runs the Loihi project. Davies's riposte in turn prompted LeCun to launch another broadside against spiking neurons on his Facebook page.

"AFAIK, there has not been a clear demonstration that networks of spiking neurons (implemented in software or hardware) can learn a complex task," wrote LeCun. "In fact, I'm not sure any spiking neural net has come even close to state-of-the-art performance from garden-variety neural nets."

But Sejnowski's lab and Siegelmann's team at the University of Massachusetts Amherst's Biologically Inspired Neural and Dynamical Systems Laboratory provide new hope.

Sejnowski, during a conversation with ZDNet at the Salk Institute in April, predicted a major role for spiking neurons in the future.

"There's going to be another big shift, which will probably occur within the next five to ten years," said Sejnowski.

"The brain is incredibly efficient, and one of the things that makes it efficient is because it uses spikes," observed Sejnowski. "If anyone can get a model of a spiking neuron to implement these deep nets, the amount of power you need would plummet by a factor of a thousand or more. And then it would get sufficiently cheap that it would be ubiquitous, it would be like sensors in phones."

Hence, Sejnowski thinks spiking neurons could give a big boost to inference, the task of making predictions, on energy-constrained edge computing devices such as mobile phones.

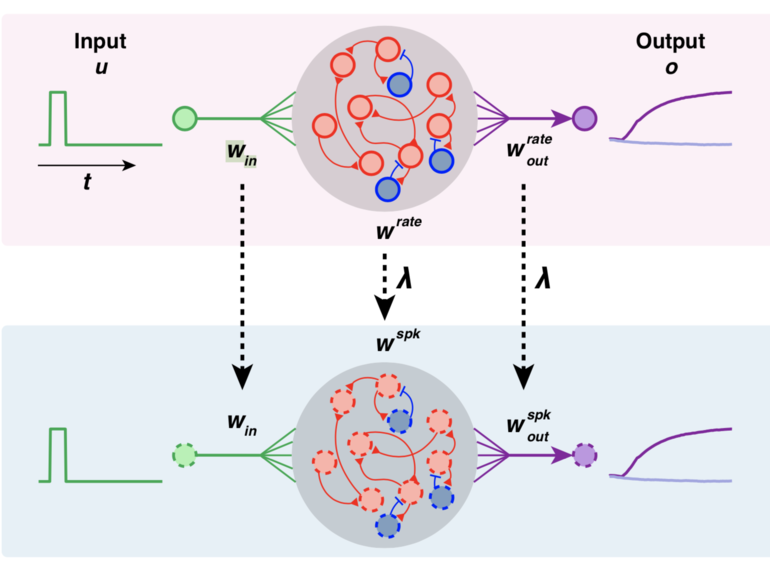

The work by Sejnowski's lab, written by Robert Kim, Yinghao Li, and Sejnowski, was published in March. Titled "Simple Framework for Constructing Functional Spiking Recurrent Neural Networks," the paper, posted on the bioRxiv pre-print server, describes training a standard recurrent neural network and then transferring its parameters to a spiking neural network.
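The train-then-transfer idea can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the authors' code: random weights stand in for a trained rate RNN, the single scaling constant `LAMBDA` used to shrink the recurrent and readout weights is an assumption, and the leaky integrate-and-fire dynamics, exponential synapses, and every constant below are illustrative choices.

```python
import math
import random

# Illustrative constants; these are assumptions, not values from the paper.
N = 20                 # number of recurrent units
LAMBDA = 25.0          # hypothetical rate-to-spike weight scaling constant
DT = 1e-4              # integration step: 0.1 ms
TAU_M = 0.01           # membrane time constant: 10 ms
TAU_SYN = 0.02         # synaptic decay time constant: 20 ms
V_REST, V_THRESH, V_RESET = -65.0, -40.0, -65.0  # membrane voltages in mV
DRIVE = 30.0           # hypothetical gain on the external input, in mV


def make_rate_weights(n, seed=0):
    """Stand-in for a trained rate RNN. Random weights replace the ones
    that gradient-based training would produce in the real framework."""
    rng = random.Random(seed)
    w_rec = [[rng.gauss(0.0, 1.0 / math.sqrt(n)) for _ in range(n)] for _ in range(n)]
    w_in = [rng.uniform(1.0, 2.0) for _ in range(n)]
    w_out = [rng.gauss(0.0, 1.0 / math.sqrt(n)) for _ in range(n)]
    return w_rec, w_in, w_out


def transfer(w_rec, w_out, lam=LAMBDA):
    """Copy the rate network's recurrent and readout weights into the
    spiking network, dividing each by one scaling constant (an assumption)."""
    w_rec_spk = [[w / lam for w in row] for row in w_rec]
    w_out_spk = [w / lam for w in w_out]
    return w_rec_spk, w_out_spk


def run_lif(w_rec, w_in, w_out, inputs):
    """Simulate leaky integrate-and-fire units with exponentially decaying
    synapses; return the readout trace and the total number of spikes."""
    n = len(w_out)
    v = [V_REST] * n           # membrane potentials
    s = [0.0] * n              # synaptically filtered spike trains
    decay = math.exp(-DT / TAU_SYN)
    outputs, spike_count = [], 0
    for u in inputs:
        # Input current: recurrent filtered spikes plus scaled external input.
        currents = [sum(w_rec[i][j] * s[j] for j in range(n)) + DRIVE * w_in[i] * u
                    for i in range(n)]
        spiked = []
        for i in range(n):
            # Forward-Euler step of tau_m * dv/dt = -(v - v_rest) + I.
            v[i] += DT * (-(v[i] - V_REST) + currents[i]) / TAU_M
            if v[i] >= V_THRESH:   # threshold crossing emits a spike
                v[i] = V_RESET
                spiked.append(i)
        # Decay every synaptic trace, then add 1 for each new spike.
        s = [x * decay for x in s]
        for i in spiked:
            s[i] += 1.0
        spike_count += len(spiked)
        outputs.append(sum(w_out[i] * s[i] for i in range(n)))
    return outputs, spike_count


w_rec, w_in, w_out = make_rate_weights(N)
w_rec_spk, w_out_spk = transfer(w_rec, w_out)
trace, n_spikes = run_lif(w_rec_spk, w_in, w_out_spk, [1.0] * 2000)  # 0.2 s of input
print(f"{n_spikes} spikes, final readout {trace[-1]:.4f}")
```

In this sketch all learning would happen in the rate network, where standard gradient methods apply, and the spiking network only inherits the weights. That split is the appeal of the approach: spikes are all-or-nothing events, which makes training a spiking network directly with backpropagation difficult.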
