Data science without statistics is possible, even desirable

- by 7wData

The purpose of this article is to clarify a few misconceptions about data and statistical science.

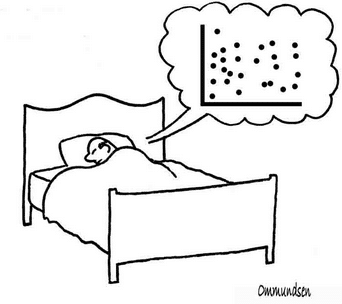

I will start with a controversial statement: data science barely uses statistical science and techniques. The truth is actually more nuanced, as explained below.

But the new statistical science in question is not regarded as statistics by many statisticians. I don't know what to call it; "new statistical science" is a misnomer, because it is not all that novel. And statisticians regard it as dirty data processing, not elegant statistics.

It contains topics such as

I have sometimes used the word rebel statistics to describe these methods.

While I consider these topics to be statistical science (I contributed to many of them myself, and my background is in computational statistics), most statisticians I have talked to do not see them as statistical science. And calling this stuff statistics only creates confusion, especially for hiring managers.

Some people call it statistical learning. One of the pioneers of this type of method is Trevor Hastie, who co-authored one of the first data science books, The Elements of Statistical Learning.

2. Data science uses a bit of old statistical science

Including the following topics, which curiously enough, are not found in standard statistical textbooks:

These techniques can be summarized in one page, and time permitting, I will write that page and call it "statistics cheat sheet for data scientists". Interestingly, of a typical 600-page textbook on statistics, about 20 pages are relevant to data science, and these 20 pages can be compressed into a quarter of a page. For instance, I believe that you can explain the concepts of random variable and distribution (at least what you need to understand to practice data science) in about 4 lines, rather than 150 pages. The idea is to explain them in plain English with a few examples, defining a distribution as the expected (model-based) frequency distribution, or as the limit of an observed frequency distribution (histogram) as the number of observations grows.
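To make the "limit of a histogram" idea concrete, here is a minimal sketch in Python. It is an illustration only, not taken from any textbook or from the cheat sheet mentioned above: the fair six-sided die, the function names `empirical_distribution` and `max_gap`, and the fixed seed are all assumptions chosen for the example. It simulates die rolls and measures how far the observed frequencies are from the model value of 1/6.

```python
import random
from collections import Counter

def empirical_distribution(n_rolls, seed=0):
    """Relative frequency of each face after n_rolls of a simulated fair die."""
    rng = random.Random(seed)  # fixed seed (an arbitrary choice) so runs are repeatable
    counts = Counter(rng.randint(1, 6) for _ in range(n_rolls))
    return {face: counts[face] / n_rolls for face in range(1, 7)}

def max_gap(freqs):
    """Largest absolute difference between the histogram and the model value 1/6."""
    return max(abs(p - 1 / 6) for p in freqs.values())

# The gap between observed frequencies and the model should tend to shrink
# as the number of rolls grows.
for n in (100, 10_000, 100_000):
    print(n, round(max_gap(empirical_distribution(n)), 4))
```

With more rolls, the histogram should settle closer and closer to the flat model distribution, which is the plain-English definition given above.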

Fun fact: some of these classic stats textbooks still feature tables of statistical distributions in an appendix. Who still uses such tables for computations? Not a data scientist, for sure. Most programming languages offer libraries for these computations, and you can even code them yourself in a couple of lines. A book such as Numerical Recipes in C++ can prove useful, as it provides code for many statistical functions; see also our source code section on DSC, where I plan to add more modern implementations of statistical techniques, some even available as Excel formulas.
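As a rough illustration of the "couple of lines" claim, the sketch below replaces a standard normal table using only Python's standard library. The function names `normal_cdf` and `normal_quantile` are made up for this example, and inverting the CDF by bisection is just one simple choice, not the method of any particular book or library.

```python
import math

def normal_cdf(x, mu=0.0, sigma=1.0):
    """P(X <= x) for a normal variable, via the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def normal_quantile(p, lo=-10.0, hi=10.0, tol=1e-10):
    """Standard normal quantile, found by bisection on normal_cdf."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if normal_cdf(mid) < p:
            lo = mid  # the quantile lies to the right of mid
        else:
            hi = mid  # the quantile lies at or to the left of mid
    return (lo + hi) / 2.0

print(round(normal_cdf(1.96), 4))        # the value a table lists for z = 1.96
print(round(normal_quantile(0.975), 4))  # the z value for a 97.5% cumulative probability
```

In practice you would likely reach for a library instead; in Python 3.8 and later, `statistics.NormalDist` offers `cdf` and `inv_cdf` methods that cover the same ground.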

In particular, OLS (ordinary least squares), Monte-Carlo techniques, mathematical optimization, the simplex algorithm, and inventory and pricing management models.
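Of the techniques just listed, OLS is the easiest to show in a few lines. The sketch below fits a straight line with the textbook closed-form formulas, using only plain Python. The function name `ols_fit` and the five data points are invented for illustration; they do not come from the article.

```python
def ols_fit(xs, ys):
    """Return (intercept, slope) of the line minimizing the sum of squared residuals."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                   # covariance of x and y over variance of x
    intercept = mean_y - slope * mean_x  # the fitted line passes through the means
    return intercept, slope

# Made-up sample data, roughly following y = 2x.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
intercept, slope = ols_fit(xs, ys)
print(f"y = {intercept:.2f} + {slope:.2f} * x")
```

This covers only the single-predictor case; with several predictors you would normally rely on a linear algebra library rather than hand-written sums.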


One thought on “Data science without statistics is possible, even desirable”

This blog was debunked … thoroughly. It is ridiculous. E.g., it fails to name a single technique for data analysis that is not statistics. The author makes glaring errors with regard to the nature of statistics and its techniques.